What’s Missing from Financial AI Training Data and Why It Matters

Most AI systems labeled as “financial” are trained on reports and filings, not the invoices and transactions that actually move money.

Why Most Financial AI Models Are Trained on the Wrong Documents

Artificial intelligence in finance has made impressive progress. Large language models can summarize earnings calls, analyze SEC filings, and answer questions on financial reports.

But when these same models are applied to real enterprise finance workflows such as invoice processing, reconciliation, and audits, they often fail in surprising ways.

The reason is simple, but rarely stated clearly:

Most datasets labeled as “financial” contain very little data about actual financial transactions.

Enterprise finance teams run on transactions, and most “finance data” used to train AI isn’t actually financial in an operational sense. Until AI is trained on real transactional documents, it will struggle in AP, AR, and audit.

In this blog, we break down why that gap exists, how large it really is, and why it fundamentally limits today’s financial AI systems.

What Public Financial Document Corpora Consists Of

FinePDFs is a very large public dataset made by extracting text from PDFs found on the internet. It contains approximately 475 million PDFs (almost 3 trillion tokens), collected from websites across the web.

FinePDFs is excellent for teaching AI about:

How to read long PDFs

How to handle complex layouts

How to do OCR on scanned documents

It is built from Common Crawl, which means it only contains documents that are publicly available on the web, such as research papers, financial reports, whitepapers, government and policy PDFs, and legal filings. And this creates a serious blind spot.

It captures documents that talk about finance or business, or describe information, and are not used for daily finance operations. It rarely includes invoices, purchase orders (POs), remittance information, and internal bank statements.

If AI is trained heavily on FinePDFs, it learns how to read reports and papers very well, but it never learns how transactional documents actually look or work.

Even when we look beyond FinePDFs, public finance datasets still don’t contain the data needed for real enterprise finance automation.

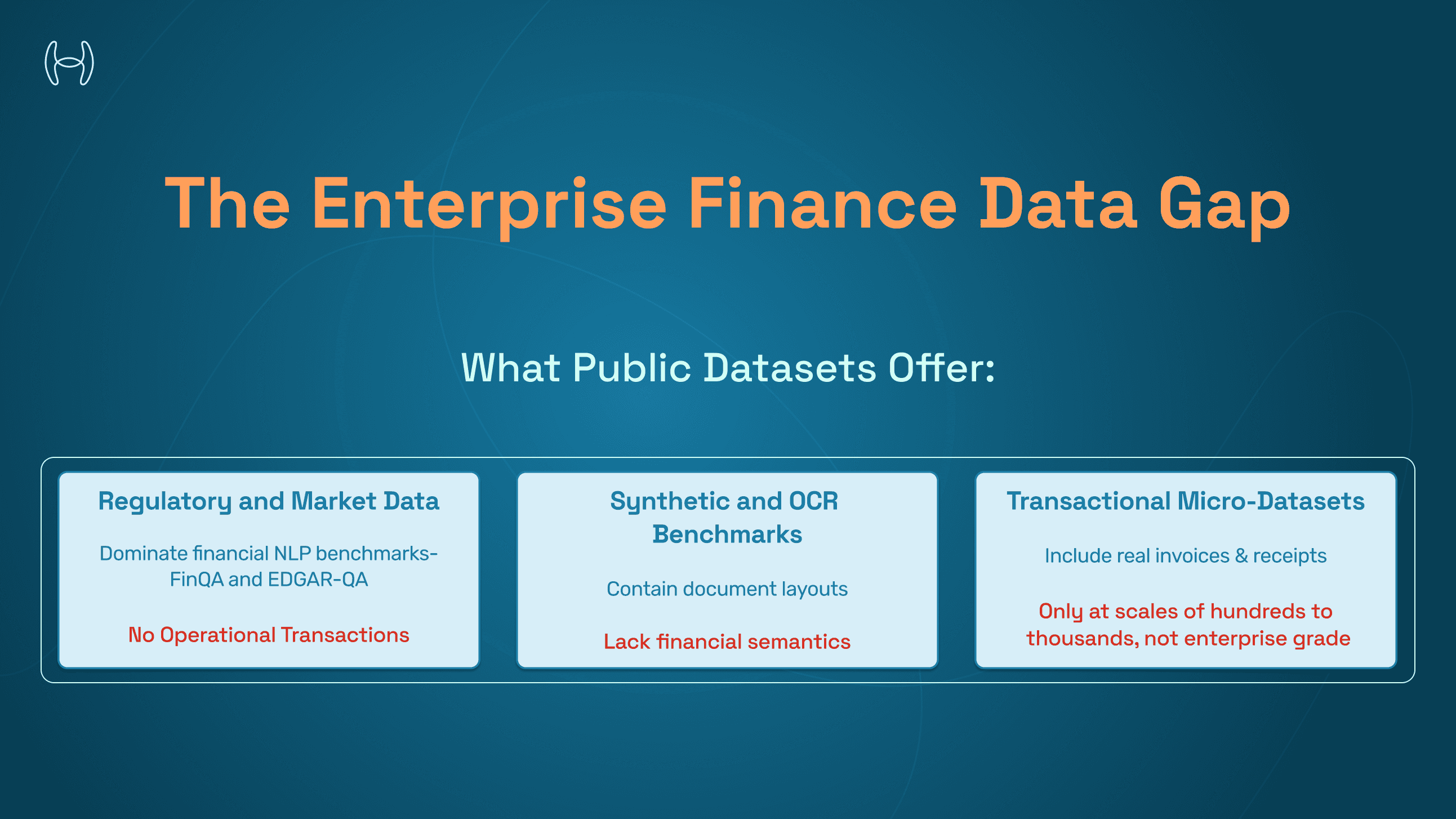

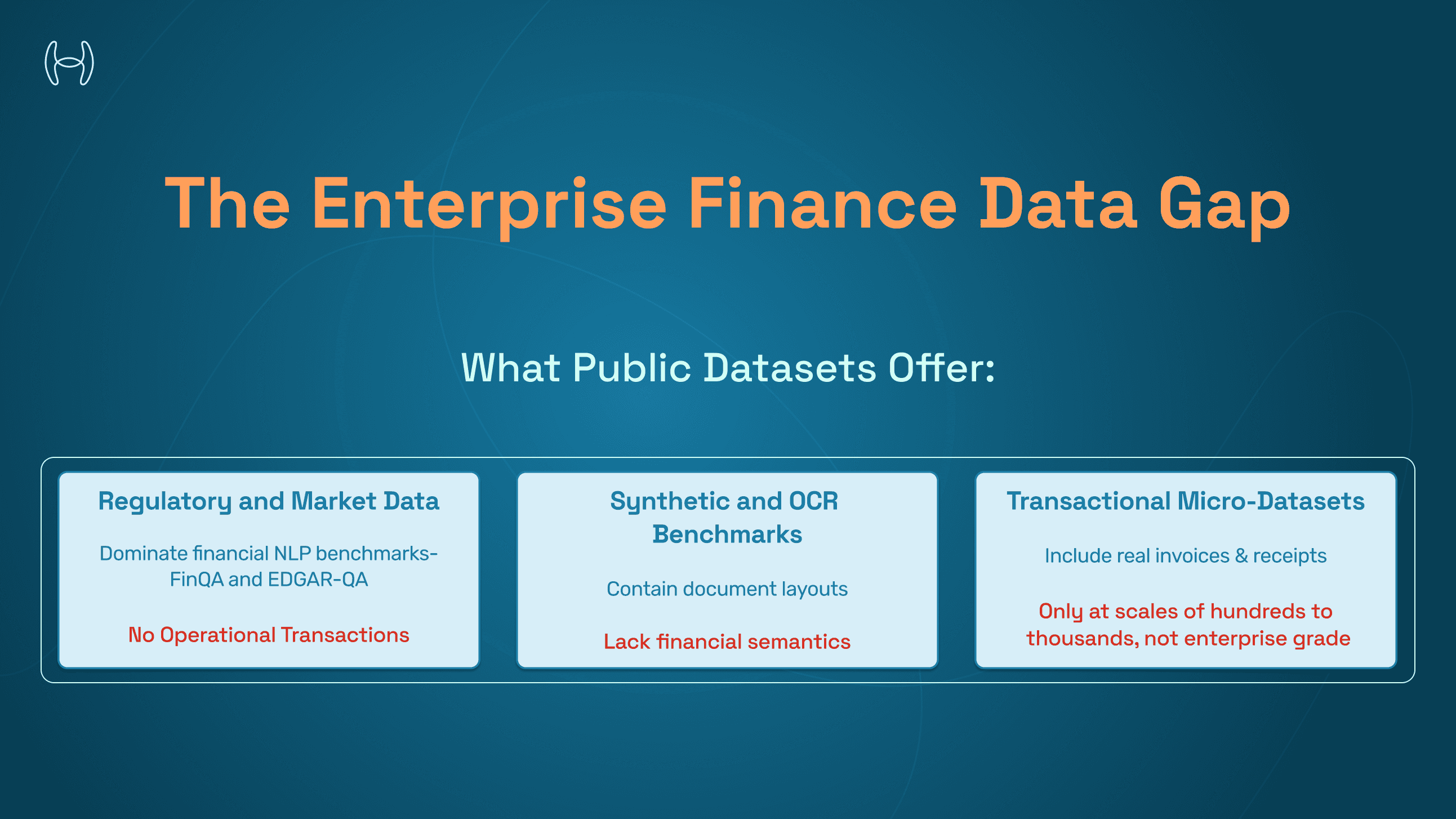

Other finance datasets include:

Regulatory and market data

SEC EDGAR filings (10-K, 10-Q, 8-K), earnings call transcripts, and prospectuses dominate financial NLPs benchmarks such as FinQA and EDGAR-QA, but contain no real operational transactions.

Synthetic and OCR Benchmarks

RVL-CDIP, DocBank, and DocVQA focus on document layouts, not financial semantics such as understanding prices, taxes, totals, or accounting logic.

Transactional Micro-Datasets

SROIE, CORD, and FUNSD come closest to real finance data-containing invoices and receipts, but are less in number, only at scales of hundreds to thousands. But enterprise finance operates at millions of invoices per year, thousands of vendors, and dozens of layouts per vendor.

Hence, public finance datasets either understand text, layout, or invoices, but never all three at enterprise scale.

The Research Finding: Measuring the Transactional Gap

To quantify this mismatch, we analyzed a finance-labeled corpus of 12.3 million multi-page PDFs (roughly 100B tokens) sampled from a much larger English-language document collection.

A random subset of ~400 documents was manually annotated into semantic categories based on document intent: reporting, regulatory, academic, contractual, or transactional.

The intent was understood by analyzing if the document:

Creates a financial obligation (e.g., an invoice)

Modifies one (e.g., a credit note)

Settles one (e.g., a payment or remittance).

If it doesn’t do one of these, it’s not operational finance, even if it’s “about money”.

What we found was striking:

Document Type | Share of Corpus |

Regulatory filings, financial statements, investor materials | ~36% |

Academic, market, and policy documents | ~45% |

Contracts, RFPs, procurement documents | ~11% |

Invoices, POs, transactional records | < 1% |

Despite being labeled “finance data,” 99% of the corpus does not directly represent financial transactions, even though it is labeled as “finance.”

Why Invoices Are Not Compatible With Generic AI Models

Invoices are structurally different from reports, and they are not clean tables with perfect numbers.

Real-world invoices include:

Vendor-specific layouts

10–50 line items

Implicit totals

Subtotals, taxes, shipping, discounts,

OCR noise: skew, stamps, handwriting, broken numerals

Models trained on EDGAR filings or FinePDFs are simply not exposed to these constraints.

Transactional Data Is So Hard to Find

Transactional documents like invoices and purchase orders are rare in public datasets for a simple reason: they were never meant to be public.

Unlike reports or filings, transactional PDFs are:

Generated inside ERP and accounting systems

Shared privately between buyers, vendors, and banks

Require database-level ground truth for validation

Because this information lives entirely inside enterprise systems, transactional documents are almost completely absent from the open web, leaving financial AI severely undertrained for real-world finance operations.

Financial Document Classification Is Not Just Text Classification

Classifying financial documents is often framed as a text task. Telling whether a document is an invoice, a contract, or a report is not just about reading the text.

It requires multiple kinds of reasoning at the same time:

Layout Reasoning

Party-Role inference (buyer vs seller)

Numeric consistency checks

Semantic Intent Detection

Hence, financial document classification is a multimodal reasoning problem, involving:

Vision - Understand layout, tables, and structure

Language - Read text, headings, and intent

Arithmetic - Validate numbers and totals

This is why finance-trained models can still:

Hallucinate totals

Mis-assign vendors

Break reconciliation logic

They were never trained on data where correctness depends on arithmetic and accounting consistency.

The Structural Mismatch: Reports vs Transactions

Dimension | Reports & Filings | Invoices & POs |

Layout variability | Low | Very high |

Numeric grounding | Low | Critical |

OCR noise | Low | High |

Label ambiguity | Low | High |

ERP alignment | None | Required |

LLMs are optimized for linguistic coherence, not transactional accuracy. Without explicit supervision on transactional documents, they fail in unpredictable and costly ways.

Why This Matters for AP & AR Teams

AP and AR teams depend on accuracy and speed. When AI isn’t trained on real transactional data, automation quickly breaks down.

Straight-through processing drops as invoices fail validation

Exceptions increase due to misread line items and totals

Manual reviews rise, negating productivity gains

Payment errors lead to delays and vendor issues

Confidence in automation erodes

This is why many “AI-powered” AP tools still rely heavily on human checks. AI can assist, but can’t be trusted without transactional intelligence.

Conclusion: Finance AI Must Be Transaction-First

Large datasets labeled as “finance” are overwhelmingly made up of non-transactional documents such as reports, filings, and research papers. This creates a fundamental blind spot for financial AI systems, because these documents do not reflect how finance actually operates inside enterprises.

Public datasets like FinePDFs are extremely valuable for document modeling. However, because they are sourced from publicly available PDFs, they fail to capture real transactional documents, such as invoices, purchase orders, and payment records, that determine how money moves.

As a result, today’s financial AI is well-trained to read and summarize financial information, but poorly equipped to process, validate, and reconcile transactions. This structural mismatch limits automation in Accounts Payable, Accounts Receivable, and audit workflows.

Bridging this gap will require a fundamental shift:

New datasets centered on real transactional documents

Representations that respect accounting structure

Training paradigms grounded in numerical and relational correctness

Until then, most “finance AI” will remain excellent at talking about money, and unreliable at moving it.